Apple originally planned to introduce nudity detection in iOS 15.2.

Apple has always proudly proclaimed that privacy is a top priority and that it does everything possible to respect users' privacy. Yet in August the company announced a new development that has been criticised by many: despite its good intentions, it poses a serious threat by allowing total surveillance of iPhone owners.

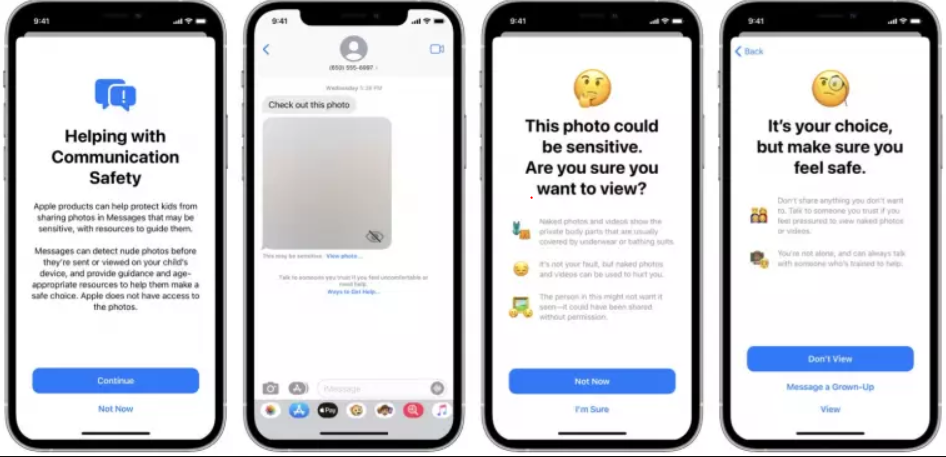

Following preliminary press reports, Apple confirmed that it was developing an algorithm that would scan all content on users' iPhones or iCloud accounts to filter out paedophile content. While the intention is indeed laudable, the implementation raised a number of privacy concerns, and the company eventually decided to take a step back and try a less direct method of protecting children. Apple instead developed a so-called nudity detection feature that uses machine learning to detect problematic content and hide it from the child, who sees only a blurred image. The new protection feature also immediately notifies parents if a child's phone has received a nude picture or, conversely, if one has been sent from the child's phone.

The nudity detection feature was supposed to arrive with iOS 15.2, but in an unexpected twist, parents did not get the promised enhancement after all. Asked about this, Apple spokesperson Shane Bauer responded that the company has not changed its mind and still plans to develop the feature in question.
